In 2025, I was awarded Immersive Arts funding to explore the potential of creating multisensory digital landscapes that are designed to reveal the hidden – and sometimes mysterious – elements of natural ecosystems.

This ‘Explore’ funding has completely revolutionised my creative practice! I’ve experienced, and experimented with, a number of innovative digital technologies: virtual reality, stereoscopic 180° image capture, 3D animation, digital visual effects, photogrammetry, LiDAR, field sound recording, biological MIDI data and multichannel soundscapes. This research and testing has allowed me to develop a solid groundwork in the immersive arts that will act as a springboard for future creative experimentation.

One of the most significant impacts of this opportunity is that I now consider sound art to be an integral and essential part of my artistic practice. I’ve discovered that an environmental soundscape can increase the richness of the artwork to the extent that a visual landscape feels empty without it. I simply can’t imagine going back to making purely lens-based artworks now because sound is such a crucial part of creating immersive, ‘multisensory’ landscapes.

This webpage is a record (in reverse chronological order) of my exhilarating immersive arts journey.

After six months of research and development, I’ve reached a major milestone in my creative practice. I’ve made a VR180 video and played it through my VR headset, and I’m absolutely blown away by the end result! Seeing a much-loved, local landscape in a virtual environment was both surreal and incredibly moving. It made me feel so optimistic and enthusiastic about the potential of this immersive technology. So much so that I’m already working on a plan to create my first professional VR artwork!

My final equipment purchase for this project was a Meta Quest 3S headset, which I’m planning to use for testing and developing my VR180 video footage. So far, I’ve only used the headset to watch a few VR videos but I’ve been particularly inspired by David Attenborough’s Micro Monsters series.

The experience of seeing such detailed microscopic imagery at an enormous scale was truly fascinating. It’s made me think about the potential of turning my crystallised lichen specimens into giant trees in a magical, futuristic ecosystem. And thanks to my recent experiments with immersive arts technologies, I can actually visualise a way to accomplish this!

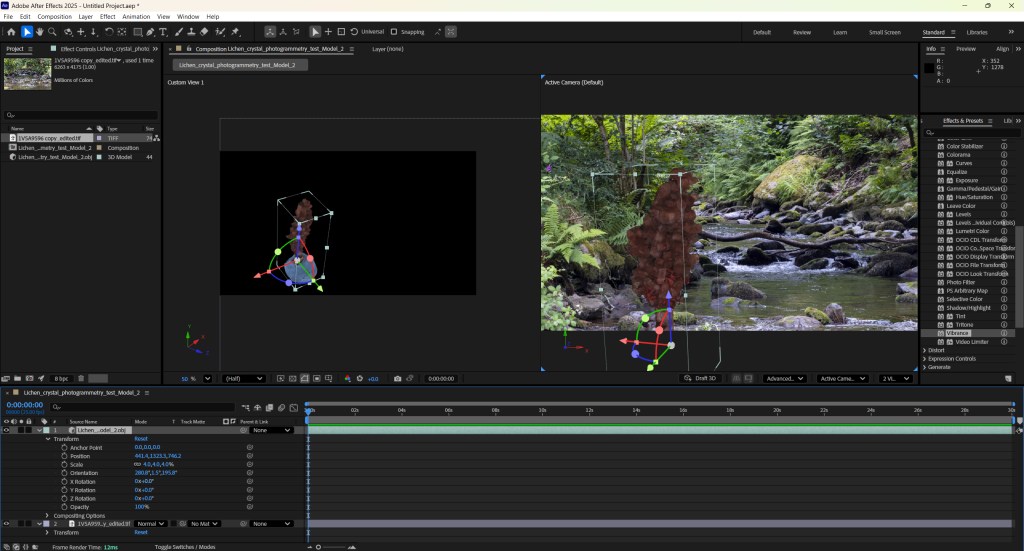

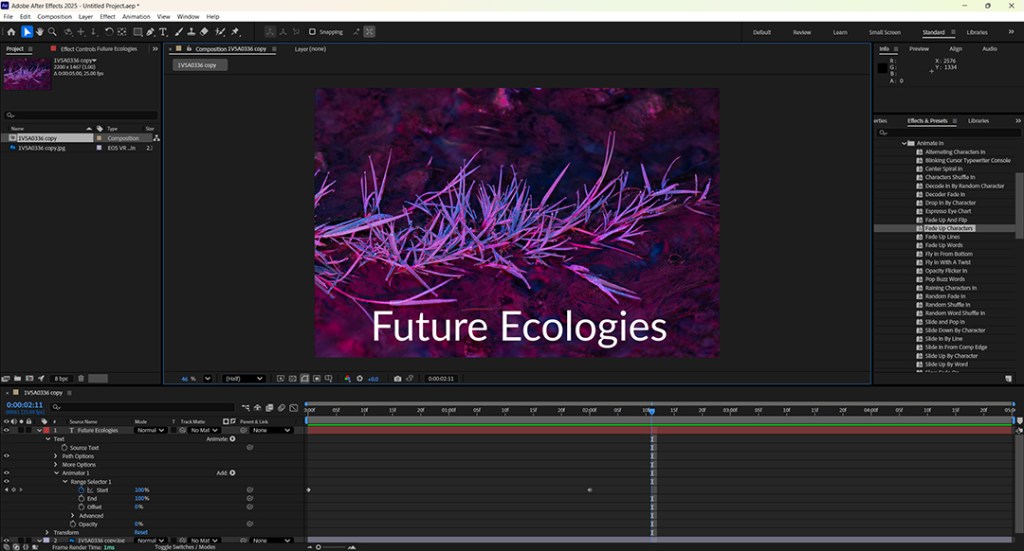

I’ve just finished the second of my Adobe After Effects training courses. This intermediate course covered working in 3D with After Effects cameras and lights, animating with expressions, rotoscoping, chroma keying and track mattes, and much more. It’s exciting to finally understand how to use the 3D tools in After Effects, which means that I can now position and animate 3D models. The potential of using these techniques within my creative practice is huge!

After a lot of online research, I’ve found a solution to my tripod problem for filming 180° video. I’ve now purchased a heavy-duty support arm that will allow me to extend my camera away from the tripod and therefore keep the legs out of the camera’s field of view. I’ve been testing this new set-up in my garden and, now that I’m happy with everything, I’ve used my new equipment to create a 180° stereoscopic video of my latest exhibition, which can be viewed in a VR headset.

I recently exhibited a commissioned artwork, which drew upon the immersive arts skills I’ve been learning over the past few months. This commission was an exciting opportunity to put my new skills into practice. For example, I was able to create a sophisticated environmental soundscape using MIDI data and a multi-speaker set-up.

This event allowed for some interesting reflections and evaluation in terms of audience experience and response. Specifically, we collected comment cards as part of the evaluation and some of these were particularly valuable for assessing my professional development. For example:

“Loved the immersive feeling of sound, visuals and surrounded by nature.”

“Loved the way the artist mixed art and science and a message of hope.”

“Beautiful calming visuals and sounds – an immersive experience. Thought provoking too.”

“I enjoyed the peaceful nature of the exhibition – very mindful, beautiful and optimistic space.”

“I loved this, beautiful sounds and imagery of nature and such an inspiring project giving hope.”

In addition, my audiovisual artwork was accompanied by two educational elements (property of the National Trust): VR headsets and an interactive screen providing virtual tours of the nearby landscapes and habitats. It was fascinating to watch how different people interacted with the different elements of the exhibition. For example, who sat down and watched the projected artwork first and who headed directly for the VR headsets or interactive screen?

(Incidentally, these educational, immersive experiences were produced by View it 360 and I took the opportunity to talk to the company director about potential solutions to my VR180 filming issues, which was incredibly helpful.)

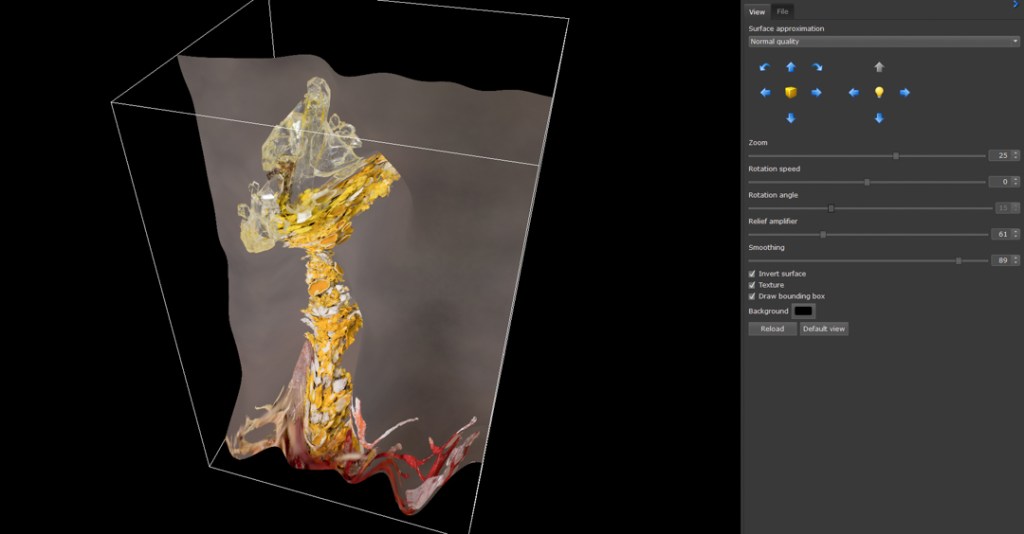

I’ve been experimenting with different ways of creating 3D models of my crystallised lichens. The first method I tried was reconstructing the 3D surface from a depth map – using a recent focus-stacked image – in Helicon Focus. This gave me some very strange results (see yellow specimen below) so I quickly progressed to photogrammetry.

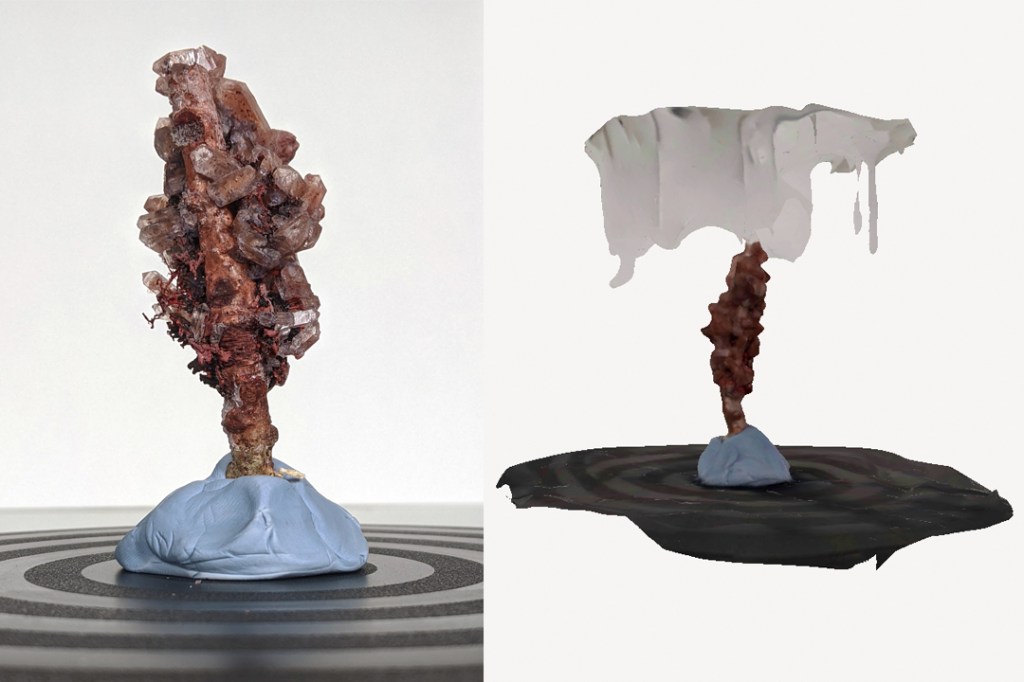

The next photograph includes two images side by side. The image on the left shows the specimen on a small turntable in my studio, while the image on the right shows the 3D model created using Polycam on my mobile phone. The 3D scanning app seemed to struggle with the turntable and it also fused part of the white background to the specimen.

By far the most successful 3D model was the one created by taking 102 raw images of the subject with my professional camera. I then processed those images in RealityScan (which, fortunately, has some very helpful tutorials available) and I was very impressed with the outcome.

I’ve been wanting to see Marshmallow Laser Feast’s artwork in person for a long time and I finally got the opportunity to see their Of the Oak installation at Kew Gardens. The immersive artwork combines interactive video, multichannel audio and an online field guide to tell the story of the Lucombe Oak.

Thanks to Daniel Birch (creative technologist and sound artist) and Somerset Film, I now know how to process MIDI data in Ableton Live. I’ve been putting my new skills to the test by synthesising music from three MIDI files I collected two years ago (of some crystals growing in lichen specimens) but wasn’t previously able to utilise. Here’s an example of some music I’ve synthesised from this MIDI data.

This means that I’m now in the position to take my audio experiments a step further and combine MIDI data with field sound recordings (using Ableton Live) in order to create a multichannel, environmental soundscape with a sense of space and distance. I’ve just done my first test of this using four portable speakers in my studio, and it sounds amazing!

My most significant purchase for this project was a Canon RF 5.2mm F2.8L Dual Fisheye Lens for stereoscopic video production. This is a highly specialised piece of equipment that I was lucky enough to find second hand. After checking, and manually adjusting, the focus difference between the left and right lenses, I set up the camera in my studio so that I could record my first VR video. Inevitably, there were some issues. The most significant of these was the tripod legs (and dials on the tripod head) appearing in the 190° field of view. I’m now researching the best possible solutions to this problem.

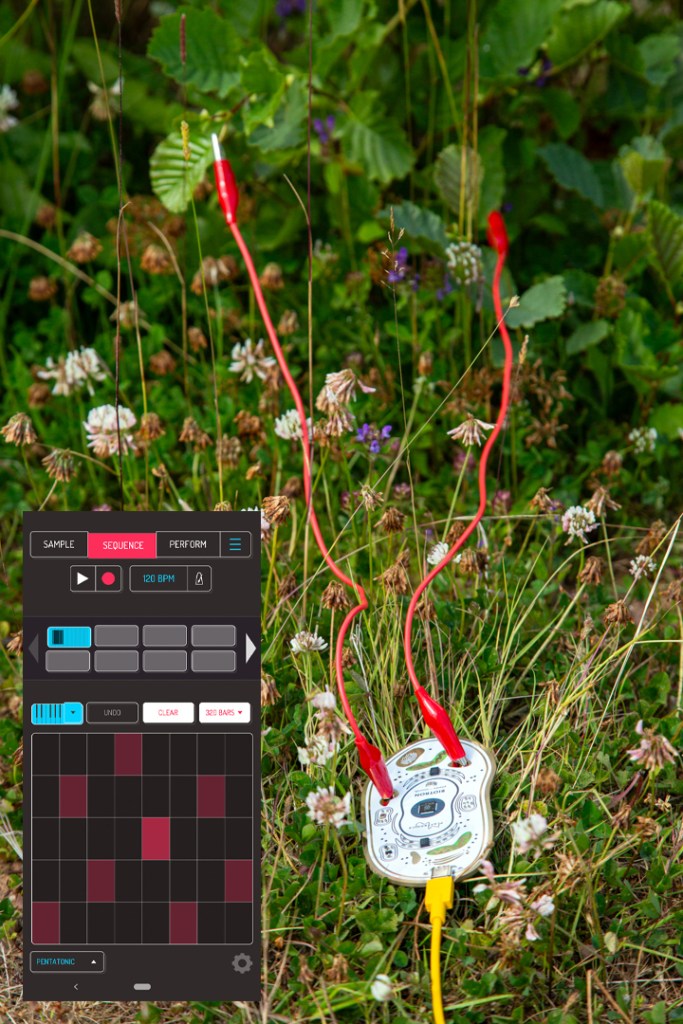

My Biotron MIDI controller finally arrived so I’ve been out and about testing it on local plants. It’s taken some effort to get everything set up successfully but now that it’s working, I’m collecting some really great data. At the moment, I’ve got the device connected to the Koala Sampler app on my phone. I think I’ll probably need to adjust the settings in order to increase the sensitivity – or perhaps the recent dry weather is having an effect on the variability of the data – but nevertheless, I’m enthusiastic about the results.

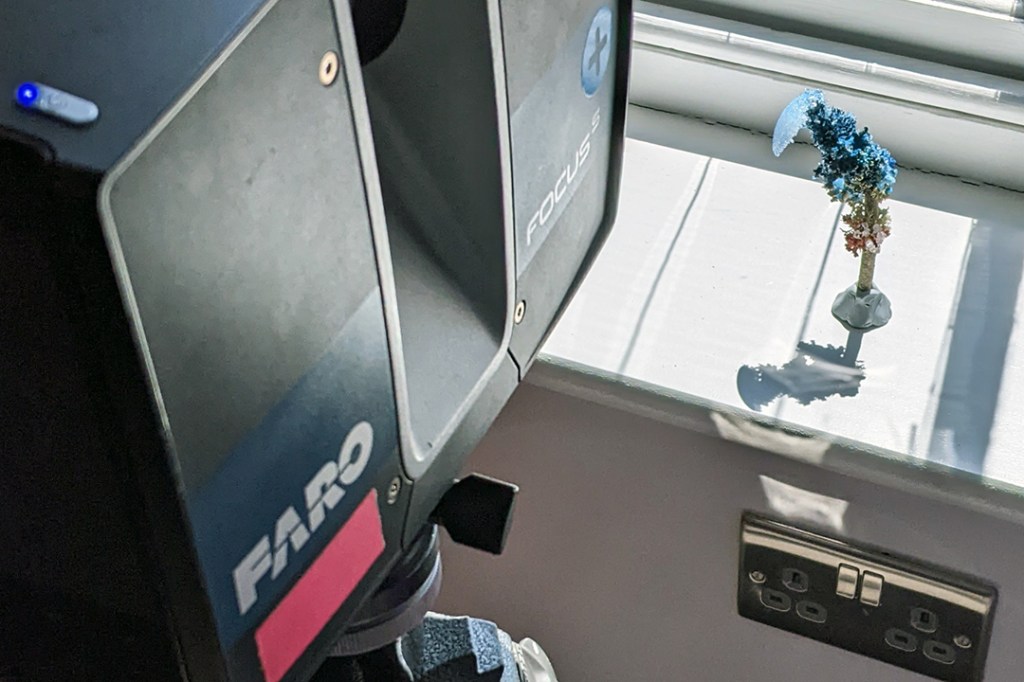

I thoroughly enjoyed my recent visit to Alice Ladenburg’s Lifescape exhibition at East Quay, Watchet. The installation combines LiDAR scanning, 3D landscape models, moving image and sound to showcase details of Somerset’s environment.

I’ve just completed my first Adobe After Effects online short course with UAL. This feels like a complete game changer for my creative practice! I’ve learned about key settings, the timeline, and working with compositions, as well as animating with keyframes and expressions. There’s a fair amount of overlap with programs like Photoshop and Premiere Pro (which I’m already familiar with) so the learning curve hasn’t been too steep. Having said that, I still feel as though there’s plenty to learn so I’ll be doing a lot of practice in the run-up to my next training course.

I’ve been very busy lately making field sound recordings of nonhuman sounds (e.g., water, wind and birdsong). I’m planning to use these mono and stereo audio files as the basis for a multichannel, environmental soundscape.

I recently attended a wonderful workshop, ‘Creative 3D LiDAR Scanning’, run by ScanLAB Projects and hosted by Somerset Film. The event was designed for artists looking to integrate 3D scanning into their creative practice as well as anyone with an interest in environmental and data science. The team demonstrated a FARO laser scanner and compared it with iPhone scanning. There was also the opportunity to scan one of my crystallised lichen specimens although, sadly, my object was too small to be scanned successfully. On a more positive note, I received some really great advice about photogrammetry so I’m going to try that in my studio soon…

One of the first things that I wanted to explore during this project was finding new ways of blending science with visual arts. I’m looking to metamorphose natural landscapes and thereby raise awareness of the role that humans play in driving environmental change. To do this, I’ve been blending some of my existing photographic imagery together using Adobe CC (Photoshop, Premiere Pro and After Effects). Here’s a recent example that I think is particularly successful. It combines a photograph of mushrooms with another image of water dispersal patterns to create the effect of spores being released from psychedelic fungi.

Future ecologies: exploring multisensory, extended reality landscapes is supported by Immersive Arts funding, which is provided through a collaboration between the UKRI Arts and Humanities Research Council (AHRC), Arts Council England, the Arts Council of Wales (ACW), Creative Scotland and the Arts Council of Northern Ireland (ACNI). Funding from Creative Scotland, ACW and ACNI is provided by The National Lottery.
